Artificial intelligence boosts super-resolution microscopy

New generative model calculates images more efficiently than established approaches

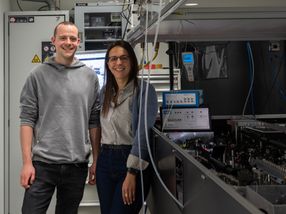

Generative artificial intelligence (AI) might be best known from text or image-creating applications like ChatGPT or Stable Diffusion. But its usefulness beyond such applications is being demonstrated in a growing number of scientific fields. In their recent work, to be presented at the upcoming International Conference on Learning Representations (ICLR), researchers from the Center for Advanced Systems Understanding (CASUS) at the Helmholtz-Zentrum Dresden-Rossendorf (HZDR), in collaboration with colleagues from Imperial College London and University College London, have introduced a new open-source algorithm called Conditional Variational Diffusion Model (CVDM). Based on generative AI, this model improves the quality of images by reconstructing them from randomness. In addition, the CVDM is computationally less expensive than established diffusion models – and it can be easily adapted for a variety of applications.

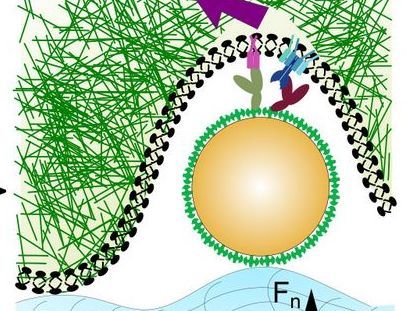

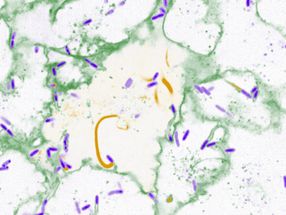

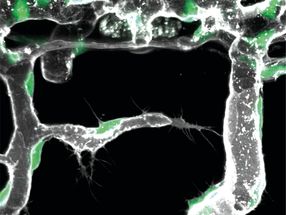

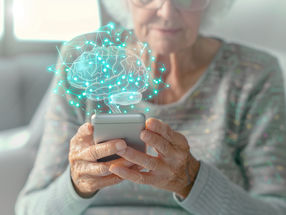

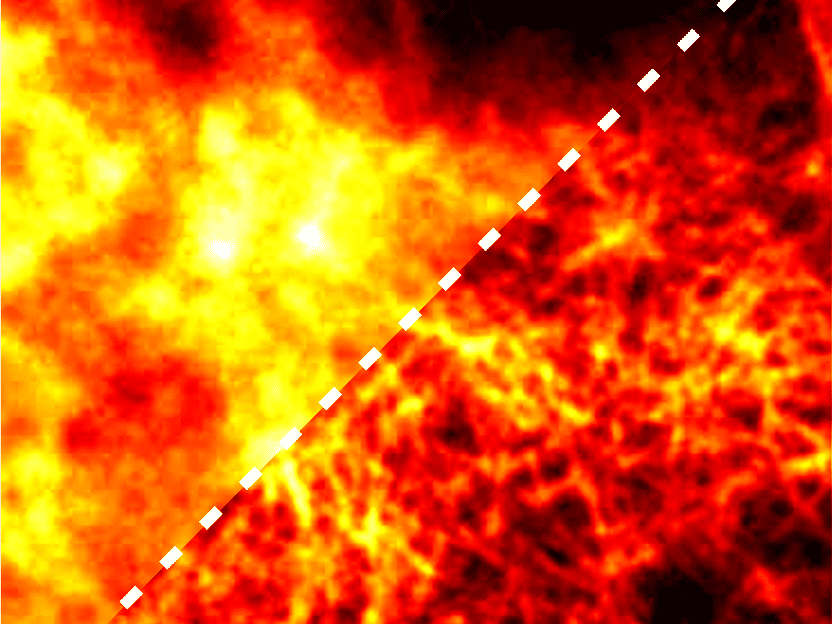

A fluorescence micrograph taken from the open BioSR super-resolution microscopy dataset

Source: A. Yakimovich/CASUS, modified image from the BioSR dataset by Chang Qiao & Di Li (licensed under CC BY 4.0, https://creativecommons.org/licenses/by/4.0/)

With the advent of big data and new mathematical and data science methods, researchers aim to decipher as yet unexplained phenomena in biology, medicine, or the environmental sciences using inverse problem approaches. Inverse problems deal with recovering the causal factors leading to certain observations. For example, suppose you have a grayscale version of an image and want to recover the colors. There are usually several valid solutions, because, for instance, a light blue shirt and a light red shirt look identical in the grayscale image. The solution to this inverse problem can therefore be the image with the light blue shirt or the one with the light red shirt.
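The grayscale example can be made concrete with a few lines of Python. This is only a minimal sketch: the function name `to_gray`, the two color values, and the use of a plain channel average are illustrative assumptions (real converters typically weight the channels differently).

```python
def to_gray(rgb):
    """Collapse an (R, G, B) triple into one brightness value.

    Assumption: a plain integer average of the three channels.
    """
    r, g, b = rgb
    return (r + g + b) // 3


# Two clearly different colors (hypothetical example values).
light_red = (250, 150, 150)
light_blue = (150, 150, 250)

# Forward direction: easy and unambiguous.
print(to_gray(light_red), to_gray(light_blue))  # both give 183

# Inverse direction: the gray value 183 alone cannot tell us
# which of the two colors produced it.
candidates = [c for c in (light_red, light_blue) if to_gray(c) == 183]
print(candidates)
```

Both colors survive as candidates, which is exactly what makes the inverse problem ambiguous: extra knowledge about plausible images is needed to pick one.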

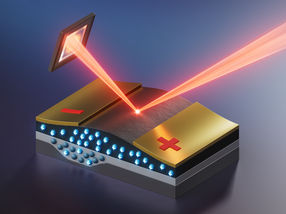

Analyzing microscopic images can also be a typical inverse problem. “You have an observation: your microscopic image. Applying some calculations, you then can learn more about your sample than first meets the eye,” says Gabriel della Maggiora, PhD student at CASUS and lead author of the ICLR paper. The results can be higher-resolution or better-quality images. However, the path from the observations, i.e. the microscopic images, to the “super images” is usually not obvious. Additionally, observational data is often noisy, incomplete, or uncertain. All of this adds to the complexity of solving inverse problems, making them exciting mathematical challenges.

The power of generative AI models like Sora

Generative AI is one of the powerful tools for tackling inverse problems. Generative AI models in general learn the underlying distribution of the data in a given training dataset. A typical example is image generation. After the training phase, generative AI models generate completely new images that are, however, consistent with the training data.

Among the different generative AI variations, a particular family known as diffusion models has recently gained popularity among researchers. With diffusion models, an iterative data generation process starts from basic noise, a concept used in information theory to mimic the effect of many random processes that occur in nature. Concerning image generation, diffusion models have learned which pixel arrangements are common and uncommon in the training dataset images. They generate the new desired image bit by bit until the pixel arrangement best matches the underlying structure of the training data. A good example of the power of diffusion models is the US software company OpenAI’s text-to-video model Sora. An implemented diffusion component gives Sora the ability to generate videos that appear more realistic than anything AI models have created before.
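To give a feeling for the noise that generation starts from, here is a minimal Python sketch of the forward direction of a diffusion process: a little Gaussian noise is mixed into the pixel values step by step until almost nothing of the original remains. A trained diffusion model learns to run this in reverse. The function names, the constant noise amount `beta`, and the step count are illustrative assumptions, not settings taken from the CVDM paper.

```python
import math
import random


def diffuse(pixels, num_steps=1000, beta=0.02, seed=0):
    """Mix a small amount of Gaussian noise into the pixels, num_steps times."""
    rng = random.Random(seed)
    x = list(pixels)
    keep, add = math.sqrt(1.0 - beta), math.sqrt(beta)
    for _ in range(num_steps):
        # Shrink the current values slightly and add fresh noise.
        x = [keep * v + add * rng.gauss(0.0, 1.0) for v in x]
    return x


def signal_remaining(num_steps, beta=0.02):
    """Factor by which the original pixel values have been scaled down."""
    return math.sqrt(1.0 - beta) ** num_steps


pixels = [0.0, 0.5, 1.0, 0.5, 0.0]  # a tiny hypothetical "image"
noisy = diffuse(pixels)
print(signal_remaining(1000))  # a very small number: the image is essentially gone
```

Generation then starts from values like `noisy` and removes the noise again in many small steps, guided by what the model has learned about the training images.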

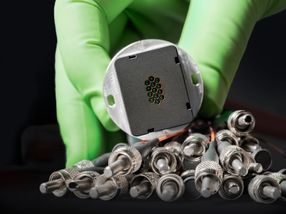

But there is one drawback. “Diffusion models have long been known as computationally expensive to train. Some researchers were recently giving up on them exactly for that reason,” says Dr. Artur Yakimovich, Leader of a CASUS Young Investigator Group and corresponding author of the ICLR paper. “But new developments like our Conditional Variational Diffusion Model allow minimizing ‘unproductive runs’, which do not lead to the final model. By lowering the computational effort and hence power consumption, this approach may also make diffusion models more eco-friendly to train.”

Clever training does the trick – not just in sports

The ‘unproductive runs’ are an important drawback of diffusion models. One of the reasons is that the model is sensitive to the choice of the predefined schedule controlling the dynamics of the diffusion process. This schedule governs how the noise is added: too little or too much, wrong place or wrong time – there are many possible scenarios that end with a failed training. So far, this schedule has been set as a hyperparameter which has to be tuned for each and every new application. In other words, while designing the model, researchers usually arrive at the schedule by trial and error. In the new paper presented at the ICLR, the authors made the schedule part of the training phase so that their CVDM is capable of finding the optimal training on its own. The model then yielded better results than other models relying on a predefined schedule.
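How much the predefined schedule matters can be illustrated by comparing two hand-picked schedules that are widely used with diffusion models, a linear and a cosine one. The sketch below is not the CVDM, which learns its schedule during training; it only shows what a fixed schedule looks like as a hyperparameter. The function names and default values are assumptions chosen for illustration.

```python
import math


def linear_alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of signal variance left after t steps of a linear schedule."""
    remaining = 1.0
    for i in range(t):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        remaining *= 1.0 - beta
    return remaining


def cosine_alpha_bar(t, num_steps=1000, offset=0.008):
    """Fraction of signal variance left after t steps of a cosine schedule."""
    def f(step):
        angle = (step / num_steps + offset) / (1.0 + offset) * math.pi / 2
        return math.cos(angle) ** 2
    return f(t) / f(0)


# Halfway through the process the two schedules disagree strongly
# about how much of the image should still be visible.
for name, schedule in (("linear", linear_alpha_bar), ("cosine", cosine_alpha_bar)):
    print(name, round(schedule(500), 3))
```

Both schedules start with the full image and end in nearly pure noise, yet they destroy the signal at very different rates in between. Picking the wrong rate for a given application is one way a training run can turn out unproductive.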

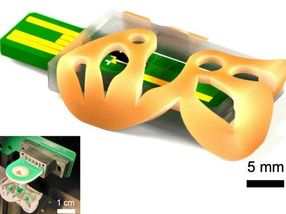

Among other things, the authors demonstrated the applicability of the CVDM to a scientific problem: super-resolution microscopy, a typical inverse problem. Super-resolution microscopy aims to overcome the diffraction limit, a limit that restricts resolution due to the optical characteristics of the microscopic system. To surmount this limit algorithmically, data scientists reconstruct higher-resolution images by eliminating both blurring and noise from recorded, limited-resolution images. In this scenario, the CVDM yielded results comparable or even superior to those of commonly used methods.
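Why limited resolution turns microscopy into an inverse problem can be sketched in one dimension. In the toy model below, two nearby point-like structures are blurred by a Gaussian kernel standing in for the optics, with optional noise on top; in the recorded signal they merge into a single blob. The Gaussian blur, the function names, and all numbers are illustrative assumptions, not a model of any particular microscope or of the BioSR data.

```python
import math
import random


def gaussian_kernel(sigma, radius):
    """Normalized Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def observe(sample, sigma=3.0, noise_std=0.0, seed=0):
    """Toy forward model: blur the sample, then add Gaussian noise."""
    rng = random.Random(seed)
    kernel = gaussian_kernel(sigma, radius=int(4 * sigma))
    radius = len(kernel) // 2
    recorded = []
    for i in range(len(sample)):
        total = 0.0
        for k, weight in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < len(sample):
                total += sample[j] * weight
        recorded.append(total + rng.gauss(0.0, noise_std))
    return recorded


def count_peaks(values):
    """Number of strict local maxima in a list of values."""
    return sum(1 for i in range(1, len(values) - 1)
               if values[i - 1] < values[i] > values[i + 1])


sample = [0.0] * 41
sample[18] = sample[22] = 1.0      # two structures, four pixels apart
recorded = observe(sample)         # noise-free recording for clarity

print(count_peaks(sample), count_peaks(recorded))
```

The sample contains two peaks, the recording only one. Reconstruction has to work backwards from the single blob to the two structures, and raising `noise_std` makes that task harder still.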

“Of course, there are several methods out there to increase the meaningfulness of microscopic images – some of them relying on generative AI models,” says Yakimovich. “But we believe that our approach has some new unique properties that will leave an impact in the imaging community, namely high flexibility and speed at a comparable or even better quality compared to other diffusion model approaches. In addition, our CVDM provides direct hints where it is not very sure about the reconstruction – a very helpful property that sets the path forward to address these uncertainties in new experiments and simulations.”

Gabriel della Maggiora will present the work as a poster at the annual International Conference on Learning Representations (ICLR) on the 8th of May in poster session 3 at 10:45. A short pre-recorded talk on the paper is available on the website. This year, the conference is being held in Europe for the first time since 2017, namely in Vienna (Austria). Whether attending on-site or via video conference, a paid pass is required to access the content. “The ICLR uses a double-blind peer review process via the OpenReview portal,” explains Yakimovich. “The reviews are accompanied by scores suggested by peers; only highly scoring papers are accepted. The acceptance of our paper is therefore tantamount to high regard by the community.”